Powder Measure Redux Part One: The Big Scale

Ever since I first used a powder measure to drop a powder charge and a scale to weigh it, one thing has puzzled me. The minimum graduation of the scales used in reloading is 1/10th of a grain (approximately 6.5 mg), and load data is also published to 1/10th of a grain. So I can weigh something that appears to match the load data, but without knowing the precision of the scale (which is not necessarily the same as its unit of graduation), I never had a feel for how far off I am and, more importantly, how much variability is actually present in the charge.

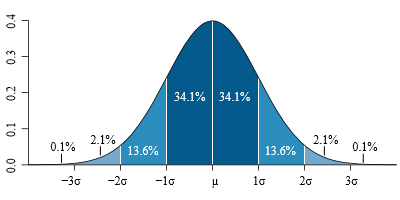

You see, when the manual says that the accuracy of the scale is +/- 0.1 grain, they probably mean the standard deviation of the measurement (at least this is the generally accepted way to publish data in science; hopefully, reloading companies follow this convention). If this is true, and the errors are roughly normally distributed, the actual weight has approximately a 68% probability of being within 0.1 grain of what the scale shows, or a 95% probability of being within 0.2 grain. So if the scale shows 38.5, the real weight of the charge is 95% likely to be between 38.3 and 38.7, a rather wide interval. If you are doing load development, your load steps are likely to be smaller than this 95% interval.
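The interval arithmetic above can be checked with a few lines of Python. This is only a sketch: it assumes the +/- 0.1 grain figure is one standard deviation and that the scale's errors are normally distributed, neither of which the manual confirms. The `coverage` helper and the constant names are made up for illustration.

```python
from statistics import NormalDist


def coverage(sigma: float, half_width: float) -> float:
    """Probability that a normal error with the given sigma lies within +/- half_width."""
    error = NormalDist(mu=0.0, sigma=sigma)
    return error.cdf(half_width) - error.cdf(-half_width)


# Assumption: the published +/- 0.1 grain spec is one standard deviation.
SIGMA_GR = 0.1
READING_GR = 38.5  # example reading from the text

for k in (1, 2, 3):
    half = k * SIGMA_GR
    p = coverage(SIGMA_GR, half)
    print(f"{READING_GR - half:.1f} to {READING_GR + half:.1f} gr: {p:.1%}")
```

Under these assumptions the one-sigma and two-sigma intervals come out near the 68% and 95% figures quoted above, and the three-sigma interval (38.2 to 38.8) covers nearly all readings.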

To really understand the limitations of the weighing equipment, a more precise instrument is needed, and I have been ogling analytical scales for quite a while. Unfortunately, most of the scales with very fine resolution are rather expensive: $3000+. The accuracy I wanted was 0.1 mg or better, which is roughly 0.0015 grain. Taking this as the standard deviation, a measurement is extremely likely to fall within 3 standard deviations of the real value, or +/- 0.005 gr, which makes the first two decimal places significant.

Eventually I found an old microbalance scale from Denver Instruments, the AB-250D, on eBay for a cool $950 + $60 shipping. The scale was built in the 1990s, but the seller claimed excellent condition and a pedigree in servicing analytical scales, and offered a 90-day warranty, so I went for it.

The scale has two resolution modes, with a standard deviation of 0.02 mg, or about 0.0003 grain. The 3-sigma interval is then about 0.001 grain, so the true value is somewhere within +/- 0.001 grain of the measurement; good enough.
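For anyone who wants to redo the unit conversions, here is a minimal sketch. It uses the standard definition of the grain (64.79891 mg) and treats the quoted milligram figures as standard deviations, as the text does. The function name and labels are my own, chosen for illustration.

```python
MG_PER_GRAIN = 64.79891  # definition of the grain in milligrams


def mg_to_grains(mg: float) -> float:
    """Convert a mass in milligrams to grains."""
    return mg / MG_PER_GRAIN


# Standard deviations quoted in the text, in milligrams.
specs = (("wanted accuracy", 0.1), ("AB-250D", 0.02))

for label, sigma_mg in specs:
    sigma_gr = mg_to_grains(sigma_mg)
    print(f"{label}: sigma = {sigma_gr:.5f} gr, 3-sigma = {3 * sigma_gr:.5f} gr")
```

The exact values are slightly smaller than the rounded ones in the text: 3 sigma works out to roughly 0.0046 grain for a 0.1 mg scale and roughly 0.0009 grain for 0.02 mg.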

The scale arrived a week ago; I have built it an enclosure that protects it from drafts and the hazards of the reloading area. The scale itself rests on concrete bricks sitting on the concrete basement floor, and does not touch the enclosure.

The most annoying feature is the stabilization time: it takes several minutes to stabilize, and the readout continues to climb for half an hour. However, the drift only affects thousandths of a grain, so the first two decimal places (tenths and hundredths) are still significant.

And so, here are a few experiments - and check back for more!